At Austin Ai, we have created and implemented state-of-the-art language models for recognizing named entities in texts. We used the very same technical foundations behind ChatGPT or Google’s BERT.

In this series of blogs, we’ll show you the key innovations behind Large Language Models (LLMs), as well as their various use cases and advantages.

We’ll also show how Austin Ai can help your firm implement these use cases and deliver solutions, while minimizing the risks of depending on major AI companies and servers and models over which you have no control.

Large language models (LLMs) are becoming an incredibly powerful and versatile tool, finding use cases in practically every professional and non-professional human endeavor.

These LLMs integrate potentially millions of diverse sources of information and media, all condensed into a single prompt.

You can leverage LLMs for everything from document writing to programming, with GitHub Copilot being a very powerful autocompletion tool.

A few prominent well-known vendors have achieved market dominance. And their models have some impressive capabilities. But it’s not always wise for companies to allow themselves to become over-reliant on outside companies for AI and LLM capabilities.

This is because it can be very risky to become excessively dependent on servers, policies, and algorithms over which you have no control.

For example, industry veterans may recall the devastating 2014 hacking attack on cloud-based code hosting service CodeSpace: An outside attacker deleted most of the company’s hosted data, backups, machine configurations, and even offsite server data were wiped out.

The company was wiped out as well, and soon completely ceased operations. Most of their customers lost most or all of the data they relied on CodeSpace to host.

If you rely entirely on LLM-as-a-service (such as ChatGPT), you also take on the same SaaS risks as any other software-as-a-service arrangement: The chance that your operations could be disrupted or worse by circumstances over which you have no control.

By understanding the key technologies involved and their capabilities and use cases, it’s possible to bring these key technologies in house. That is, you can create your own custom AI technology, designed specifically for your purposes, and that won’t be altered, canceled, or changed on you with little or no notice.

At Austin Ai, our focus is on helping you create and implement your own LLM asset, maintain meaningful operational independence from large AI companies, thereby reducing SaaS risk and the likelihood of outside business disruption.

Your LLM can become your own IP asset on your balance sheet that you control, that helps you achieve your own unique competitive advantages, and that can never be stripped away from you.

A large language model (LLM) is an artificial intelligence (AI) model built to understand, manipulate, predict, and generate human language. They’re built using a variety of deep learning techniques ––particularly neural networks and natural language processing––and are trained on vast datasets comprising text from various sources such as books, articles, and websites.

ChatGPT in particular was a breakthrough in the field of natural language processing––and sparked a revolution in computing and business.

ChatGPT, a large language model developed by OpenAI, was the first to be mass marketed to the public and adopted on a massive scale. But there are others, and competing models like Claude and Perplexity are following close on its heels.

But it was OpenAI’s ChatGPT that forever changed our perception of chatbot technology, as the performance expectations for any chatbot were raised to a new and exponentially higher level than anything on the market before.

The language fluency that LLMs enjoy, in particular chatGPT, was a breakthrough in the field of natural language processing and definitively marked a revolution. As the performance expectations for any chatbot surged to an unprecedented level, the concept of a chatbot underwent a permanent transformation.

So, what exactly are LLMs? Do they have memories? Can they learn? Before answering these questions, let us briefly sketch what is traditionally known as an AI model. In the figure below, you can observe a set of numbers, known as features, on the left, and an outcome on the right. This is a simple machine that classifies animals.

Here you can imagine features as some information about the animal. Things like its weight, color, height, length, etc. These are all features that are readily quantifiable into numbers.

But the input can also be a picture!

In that case, the numbers on the left would be the values of each pixel. Then the machine (the artificial network) tries to decide what animal the information corresponds to. The ability of the network to do this is achieved through a process that everybody already heard of: machine learning.

But what exactly is a neural network?

In simple terms, an artificial neural network is a computational model designed to mimic the way biological neural networks in the human brain process information. The artificial neural network is built to recognize patterns, classify data, assign probabilities, and make predictions.

In mathematical terms, you can think of neural networks as functions––albeit functions with a whole lot of parameters. These parameters are adjusted during the learning stage.

The function itself is where the so-called architecture of the network is encoded.

Now, you can’t pick just any function for any task. This is where selecting the correct neural network architecture is crucial: You have to carefully consider how the neural network is organized, and how it processes its input data.

The most popular architecture is the feed-forward neural network.

The feed forward network has a number of layers. Each layer is composed of a number of neurons, in such a network the information processing always flows in one direction, as in Figure 2 below.

The input neurons can be understood as “sensory” neurons. These are the neurons receiving external information.

Then inner or hidden neurons start processing the information.

They do this by taking the outputs of the previous layer, giving each data point the appropriate weighting, and summing the data according to its programming.

Later, the feed forward neural network could give a biased term (that is also trained), which encodes the preference of the neuron to be activated, regardless of the signal from the previous layer.

Next, the result is passed through a simple non-linear function producing the value of the signal of the neuron, which is passed to the next one.

Finally, the output neurons––the final layer in the multi-layer neural network––produce the final result.

The model’s output is usually interpreted as a probability. Therefore, either inside the neuron or as a part of the post-processing, the designer designates a threshold which decides the output result of the network.

For example, if the output neuron determines that the probability of the input being a cat is 0.8 and the probability of it being a dog is 0.2, the network will decide that the input is a cat, and generate the output accordingly.

With most popular AI models, once the model is created, it does not learn about its faults, and does not retain information of any kind. Its parameters are fixed when in use. If an update is desired, the model needs to be put through another learning stage, probably including new data––a process called fine tuning.

This is how LLMs achieve a basic form of “memory,” even though the model itself does not retain any information.

The transformer architecture, often appearing as a layer in architectures for natural language processing, is the key component behind LLMs like Chat GPT and many other modern AI models.

The transformer concept was originally designed as a model for language translation tasks in the seminal work “Attention is All You Need” by Vaswani et.al. in 2017.

In that paper, the authors proposed dispensing with traditional recurrent and convolutional neural network designs in favor of a simpler architecture consisting of just two parts: the encoder and the decoder.

The encoder was designed to abstract the input language features, including the role of context in the meaning and usage of words.

For example, the neural network will assign a different meaning to the word “bank” if it appears near words like “mortgage,” “interest,” and “finance” than it will if it appears near words like “cockpit” “pilot,” “turn,” and “aileron.”

Next, the decoder uses this information to decode the information processed by the encoder. The decoder then produces the translation in a generative manner: One word after another––based on the probability of the various alternative words given the context identified by the encoder––until the translation is complete.

Currently, the encoder and decoder transformers are commonly used separately, namely the encoder transformer and the decoder transformer (most popularly known as the generative transformer).

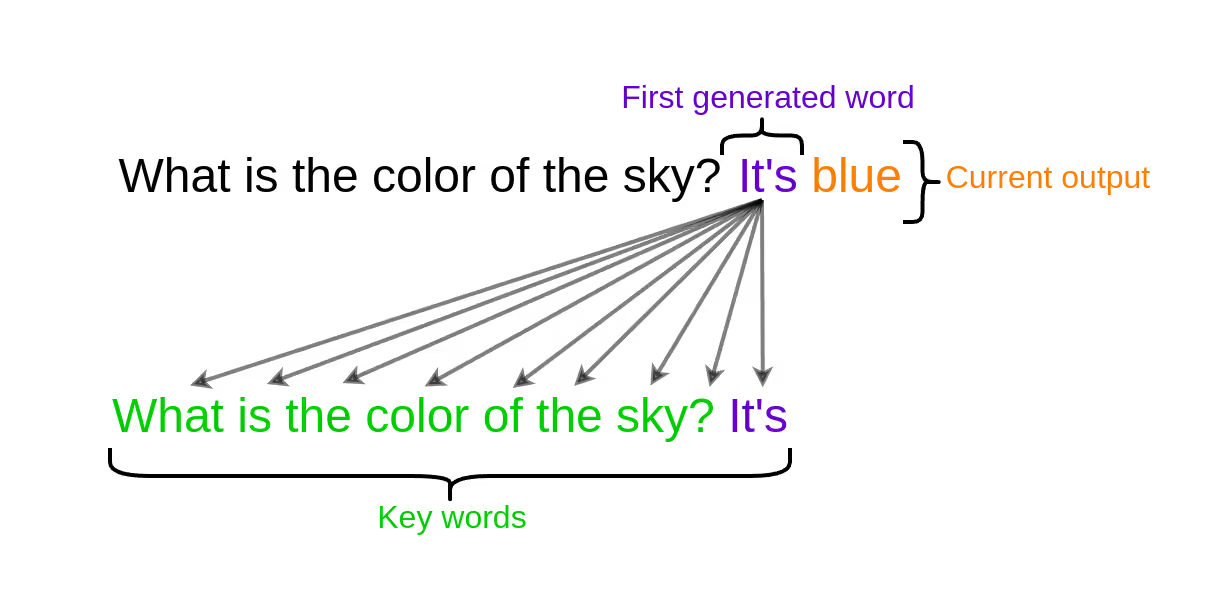

As a matter of fact, the “GPT” in ChatGPT stands for Generative Pre-trained Transformer. This could be the most impressive transformer model, as it is able to generate text given an input sentence. See the the below:

Here, the transformer decoder takes the input query, “what is the color of the sky?”

The encoder knows from hundreds of thousands of sources used to train the model that the output category “color” in the context of “sky” is nearly always blue.

The encoder may assign a lesser probability, say, 20%, to gray, and perhaps less than 1% to red.

The decoder generates the most probable following words, which in turn, in this case, is the answer to the question: Namely, “the sky is blue.”

If the programmer or user sets the threshold to anything less than 80%, the model will consistently generate the output blue. But by adjusting the model’s temperature settings, the user can increase or decrease the model’s tendency to generate the lower-frequency alternatives.

The role of the encoder, although less widely understood or appreciated, is a key component in many natural language processing tasks.

Its duty is to abstract the input language features, including the role of the context in the meaning and usage of words.

Therefore, you need it for things like named entity recognition, sentiment analysis, and many others. For a sentiment analysis example, see the image below.

Although one can find classic models that do the same job with a similar accuracy, the advantage of the transformer encoder is that the same underlying pre-trained model can be easily adapted for many other tasks, using the process of fine-tuning.

This is accomplished by training the model on a smaller and more specialized set of documents, and increasing the weighting of the most relevant types of documents. For example, an LLM can be optimized for legal or medical applications by uploading and training the model on legal and medical texts and other resources, and increasing the weighting of these documents relative to other types of documents.

A prominent example of this is the Bidirectional Encoder Representations from Transformers (BERT) model, published in 2018 by Devlin et.al.

The attention mechanism was first introduced in the context of sentence-to-sentence tasks, such as translation. It served as an additional method to keep track of context, addressing the limitation of capturing long-range dependencies in sequences, which was a challenge for recurrent neural networks (RNNs).

RNNs were the prevalent models before the advent of transformers, they consist of cells with internal states that are updated after each time step (they keep some memory during usage.)

Different forms of processing language.— RNNs process language in a sequential way. They use the internal state (or memory) to guess (and read, during training) the next word in a sentence.

Observe that this is mostly the method that a child who is learning to read uses. The child tries to keep track of the words that he/she already read and tries to retrieve the meaning from them while reading one word at a time.

On the other hand, transformers process all the words in a sentence all at once, using the self-attention mechanism. This is, all words are processed at the same time. Which is most likely how an adult person reads.

Given a sentence, the model computes the so-called attention scores which encode how much attention a word needs to pay to other words in the same sentence, in order to abstract the intricate contextual information of the sentence and the role of each word in it. See the following example:

Query words pay attention to key words, they are the same words but playing a different role.

The image depicts the attention lines of the query word what. The same is done for all the words in the sentence, simultaneously. The attention scores can be used for further processing and to classify or extract features of the sentence in the case of the transformer encoder. With a generative transformer, the abstracted information is used to generate the next word.

It’s worth mentioning that the attention mechanism is used many times in the form of stacked layers: That is, multiple layers of the same type are placed on top of each other within the neural network. These additional layers enhance the model's ability to glean patterns and representations from the data.

The initial layers may identify very basic relationships and patterns. But as the input and training data is fed through subsequent stacked layers, the model’s analysis gets increasingly refined. Each additional stacked layer can work at a higher level of abstraction, and can identify more and more advanced or subtle relationships and dependencies between words.

Internally, the transformer architecture computes three different representations of the input words, namely, the query, key, and value representations.

They each describe the same given word, but play different roles, and are represented by different sets of numerical features.

Query words pay attention to the key words. Such attention is quantified in the attention scores.

The value vectors of a word contain the features that are registered via the attention mechanism.

Finally, the outputs of the transformer are the so-called context vectors: one for each word, containing the numerical features concerning the context. These vectors are weighted sums of the value vectors using the attention scores.

This is the self-attention mechanism, in a nutshell.

Generative transformers are trained to predict the next word of a given sentence. Thus, aside from abstracting the subtleties of language, they use context to predict the most probable next word, one at a time.

In a transformer mechanism, the encoder deals with all the words in the input sentence at the same time.

In contrast, unlike transformer encoders, transformer decoders perform self-attention only using the previous words to predict the next word.

Although this sounds pretty obvious, it’s crucial during the training stage. In the generation process, each new predicted word is used to predict the next one, and so on. See the image below:

Here, the word “it’s” is generated using the previous words. Then the model uses the word “it’s” in the next round to predict “blue.” The process continues until the sentence is complete.

Large language models enabled a quantum leap over the very limited chatbot use cases from just a few years ago. These exponential advances over legacy technologies are facilitated by the following new LLM features and innovations:

As we mentioned above, LLMs like ChatGPT do not retain any information, they do not have any memory.

The chatbot is a fixed AI model. That is, its parameters do not change or adapt while the model is in use. But chatbots themselves do have a rudimentary sort of memory: For each new word they generate, they use the entire conversation history to predict it.

So the chatbot “remembers” every previous prompt in the conversation. Every time you enter a new prompt, the chatbot “reads” the entire conversation again, including your new prompt, to start generating the output, one word at a time.

Therefore, any memory or information is only stored in the history itself. Although this sounds rather inefficient, it’s actually amazing. Think about it: Humans can read a sentence at once, but LLMs can read thousands of words in an instant, and use them to continue writing.

This is why LLMs can generate long and coherent texts.

A breakthrough of the transformer architecture, compared to previous models such as Long Short-Term Memory (LSTM) based models, is the inherent parallelization facilitated by the self-attention mechanism.

The internal representations of words and attention scores can be computed independently of each other. This allows the model training to be performed in parallel, in contrast to recurrent models that process sentences sequentially.

This parallelization is a key feature of transformers, enabling the efficient training of Large Language Models (LLMs) with substantial amounts of data. It enhances the quality and reliability of the model at a relatively low computational cost.

We mentioned word features earlier in this blog post. But what exactly are they?

To answer this, let us delve into a previous revolution in the field of natural language processing: word embeddings.

Before the advent of word embeddings, words were treated as atomic entities—essentially with no internal structure.

Language models for various natural language tasks, such as sentiment analysis or spam detection, relied solely on the probability of words appearing in a given context (e.g. “bag-of-words” models).

But bag-of-words models ignore grammatical structure, word order, and the relationships between words. For bag-of-words models, there’s no subject, no object, and no verb. Modifiers don’t modify anything. What’s important in the bag-of-words model is simply that this bunch of words exists together in the same bag.

This approach is simple, easy to construct, and quickly scaleable, though for limited applications. But it also has obvious limitations, such as massive context loss due to the lack of understanding of interword relationships.

To capture interactions between words, n-gram models were used to group words in pairs or triplets, using the probability of these groups appearing to classify the text.

However, these models also had significant limitations: They could not capture the meaning of words and had a narrow context window.

To address these challenges, the introduction of internal structures for words marked a revolutionary advancement in natural language processing.

In the next section, we will explore what word embeddings are and their significance.

When considering the features to attribute to a word for internal structure, we might initially think in terms of “part of speech,” “animate vs. inanimate,” “gender,” “syntactic role,” and the like.

While these choices may appear suitable at first glance, actually implementing them in a large language model is a monumental task.

Tagging words with these features is a highly complex undertaking, and the outcomes may not be entirely satisfactory. The reason lies in the dynamic nature of word features—they depend on context.

For example, the word “bank” can refer to a financial institution, the side of a river, or the movements of an aircraft while turning.

Also modeling the interaction between words using such features is not clear.

To overcome the cumbersome duty of tagging chunks of texts and individual words depending on the context, a 2013 paper by Tomáš Mikolov and a team of researchers at Google took a radically different approach: They based their work on the idea that words appearing in similar contexts have similar meanings.

The authors proposed two simple yet powerful models for learning word embeddings, called skip-gram and c-bow models. Skip-gram predicts the context words given a target word, while c-bow predicts the target word given the context words. Therefore, such models are self-supervised: They learn from chunks of texts without human intervention.

Once the training is done, the weights of the model are used as the word embedding, in such a way that there is one vector for each word, with dimensions ranging from 50 to 300.

These vectors can be seen now as the internal structure of the words, and are, in fact, memory-efficient representations. Such representations are plainly a bunch of numerical values that are learned from the data. The exact meaning of the values is generally unknown and only makes sense within the whole and relative to other words. But the models certainly capture syntactic and semantic relationships between the words.

Word embeddings marked a significant revolution before transformers. The latter can be seen as a more complex and powerful version of the former, as transformers also produce efficient internal representations, but now also of sentences, paragraphs or any big chunk of text.

Let’s consider the word “Rainbow.”

The duty of the model is to predict the context words given the target word. For example, the natural language processing model will learn that “rainbow” is often surrounded by words like “colorful,” “sky,” “rain,” “light,” and “beautiful.” Therefore, the word embedding of “rainbow” will be a vector that points to the direction of these words in the embedding space.

The image below shows a simplified version of the skip-gram model.

In the skip-gram concept shown, the first layer on the left of the diagram consists of the input neurons (you can think of them as “sensory neurons”).

The layer in the middle contains the hidden neurons.

And the neurons in the rightmost layer correspond to the output neurons with one-hot encoding. A softmax function applied there is the only non-linearity used in this network. This function converts the output neurons into a probability distribution that the output layer uses to predict the output.

So in this example, the weights shown in brown resemble the word vector of the word “Rainbow”.

In the practice, using word embeddings appears simply as the mapping from words to vectors. For instance, the word “king” can be represented as a vector like this:

Such vectors lie in the embedding space, and their meaning only makes sense in relation to other words. For example, the word “king” is similar to the word “monarch” as they appear in similar contexts. Therefore, their distance in the embedding space is small compared to other words like “banana” or “car.”

Word vectors also have the astounding property of capturing semantic and syntactic analogies. For example:

That is, the word “Germany,” minus its capital city, plus the acronym “USA” is a vector close to “Washington-DC.” Thus, capital cities are bunched up in the embedding space and have a similar distance with their country counterparts!

In conclusion, the obscure numbers that represent words are a powerful tool for capturing the multidimensional complexity of words.

Austin Ai helps companies and other organizations improve competitiveness and reduce risk by achieving more independence from outside technology corporations

Why is this important? Two reasons:

First, while the offerings of major tech players are undoubtedly of high quality and reliability in many respects, they are not immune to failures. Over-reliance on any one platform, model, or vendor carries a massive amount of risk.

Failures within any system are inevitable, underscoring the need for service diversification and the establishment of backup resources.

Second, relying exclusively on LLM models through services such as Langchain or Griptape lacks long-term reliability. You simply remain too vulnerable to the risk of changes to the service framework. The risks include outside policy changes or algorithm changes that may significantly impact prompt outcomes and potentially disrupt services or cause brand damage or liability.

It’s imperative to maintain adaptability, and nurture alternatives and backup resources, and to conduct fine tuning to optimize your model for your specific purposes.

Expect the unexpected!

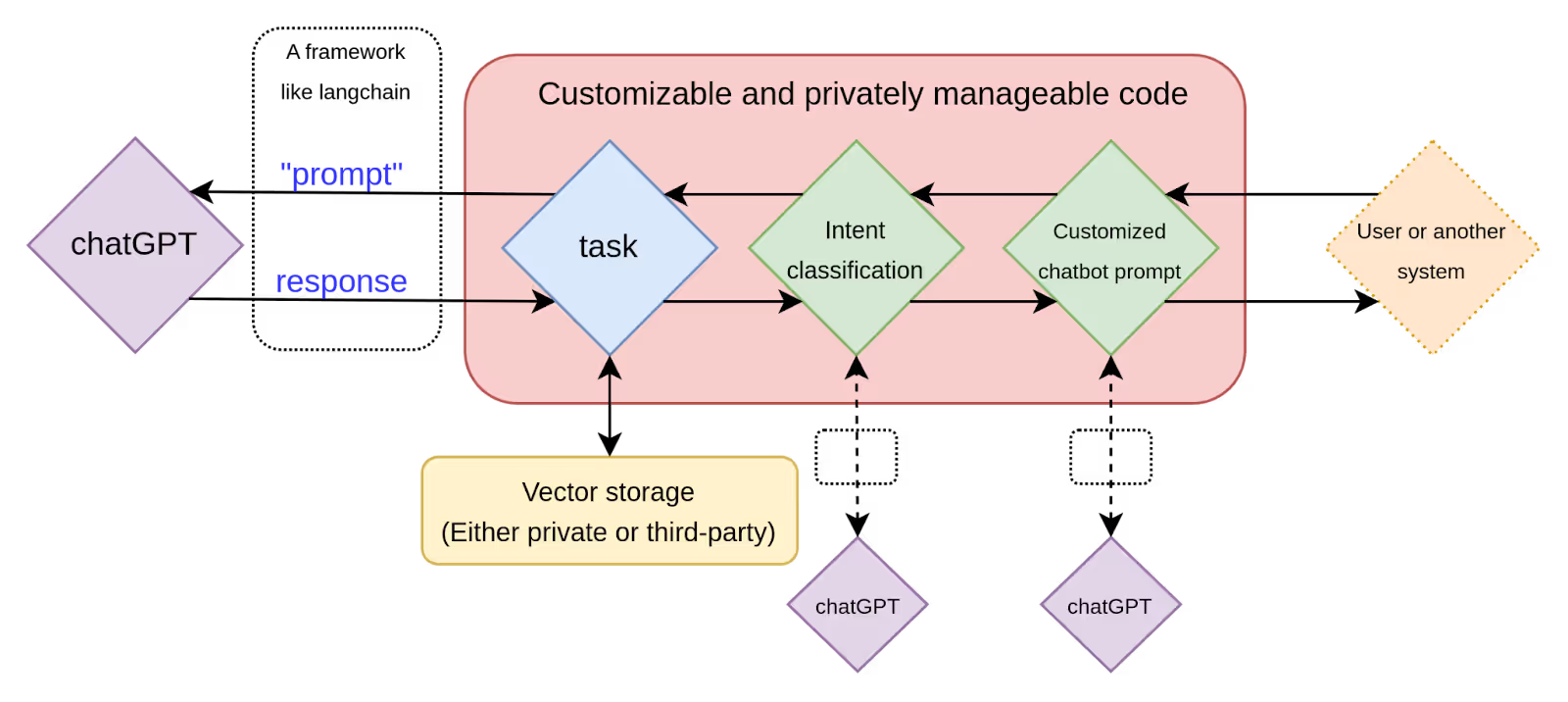

The following scheme depicts a system that relies on a third-party service like ChatGPT for language processing. Here, the service is used as the primary resource for language processing, with the frontend (e.g., a chatbot) receiving queries and sending them to the model for processing.

The model then generates a response, which is sent back to the frontend for display.

In this scenario, part of the code is customizable and privately manageable. But each of those components relies on the continued smooth functioning of ChatGPT or whatever other model-as-a-service vendor is selected.

If the service fails, or the LLM is updated or changed––things over which you have no control––it could disrupt your entire system.

And the LLM will be updated or changed.

Now, utilizing a third-party model-as-a-service like ChatGPT can be a reasonable choice, especially for the initial stages of a project requiring language processing. Leveraging a service such as ChatGPT as the primary resource expedites the early development of your own applications.

However, while ChatGPT can be fine-tuned for specific tasks, it is not entirely customized for them. Meanwhile, you are still faced with the classic SaaS risk: You are completely dependent on someone else’s servers and on their policies that you cannot control, but that they can change on a whim.

For critical applications or services that need a high degree of both customizability and reliability, there’s a better way: Bring the model in-house, entirely under your control.

That’s where Austin Ai comes in: Our expertise in constructing transformer-based models, alongside the capability of fine-tuning LLMs like Llama and operating them on private servers, can help you cease your dependency on third-party services.

This drastically lowers the risk of major and even catastrophic data loss, service disruptions, outside prompt hacking, brand damage from inappropriate outputs, and other threat vectors.

The following scheme illustrates a system where the third-party LLM model is used as a backup resource, with the primary language processing performed by a model tailored to our client’s specific needs.

There’s no “one-size-fits-all” solution. Both approaches have their own virtues and drawbacks.

The best approach depends on each project’s complexity, long-term goals, required reliability, and the time available to design and implement.

At Austin Ai, we work closely with clients to examine your specific use cases, and help you determine the best architecture, combination of technologies, and approach. And we help you design, implement, fine tune, and deploy the solution that makes the most sense for your specific circumstances.

If you’re a manager and your organization is exposed to substantial third-party SaaS/vendor risk, or you’re considering leveling up your intelligent use of data using machine learning or AI technology, we should probably be working together.

To arrange an exploratory meeting and consultation, please contact us here or email us at info@austinai.io.

David Davalos, PhD is an ML Engineer/Dev, Physicist at Austin Artificial Intelligence.