Local Llama Quantized Models vs. ChatGPT Models: In sentiment analysis both families of models perform similarly and suffer from the same drop in performance when including neutral sentiments. For NER and POS tagging, all models have similar problems with miscellaneous entities, except for the ChatGPT 4o.

Performance Stratification and Gaps: For most tasks, there is a clear gap in performance between the best and worst models. Additionally, they tend to stratify according to how aggressive the quantization is. However, less aggressive quantizations do not always perform better; the quantization type plays a crucial role.

NLP Tasks and Prompt Engineering: Large Language Models (LLMs) like Llama can perform a wide range of Natural Language Processing (NLP) tasks through a method called prompt engineering. This is used by tools such as langchain to guide the model towards the desired output.

Complexity of Multi-Class Classification: Language models struggle to classify text into more than two categories, especially when handling neutral sentiments. This happens to ChatGPT too.

NER and POS Tagging: These tasks consist of tagging the tokens of the input text. This is particularly challenging as a deep understanding of context is needed aside from the inherent multi-class classification behind.

The Struggle of Generative Transformers: They are good in tasks focused on the big picture, where subtleties and misunderstandings can be washed out. However, for detailed context-sensitive tasks, the generative nature of transformers struggles with the duty. Here is where encoding transformers shine.

Local Llama Quantized Models vs. ChatGPT Models: In sentiment analysis both families of models perform similarly and suffer from the same drop in performance when including neutral sentiments. For NER and POS tagging, all models have similar problems with miscellaneous entities, except for the ChatGPT 4o.

Performance Stratification and Gaps: For most tasks, there is a clear gap in performance between the best and worst models. Additionally, they tend to stratify according to how aggressive the quantization is. However, less aggressive quantizations do not always perform better; the quantization type plays a crucial role.

Large Language Models (LLMs) have demonstrated remarkable capabilities across a wide range of Natural Language Processing (NLP) tasks, including translation, text summarization, and grammar correction. However, they continue to face challenges with language subtleties that are critical for tasks like Named Entity Recognition (NER) or multi-class sentiment analysis. In this blog entry, we build upon our previous exploration of Llama models in various quantization settings. This time, we focus on how these models perform on benchmarkable NLP tasks across different quantization levels and types, as well as their sensitivity to temperature settings. Additionally, we compare the performance of quantized Llama models to that of ChatGPT models, noting that while most models struggle with complex tasks, the relatively new ChatGPT 4o stands out as an exception. We are confident that you will find our results interesting.

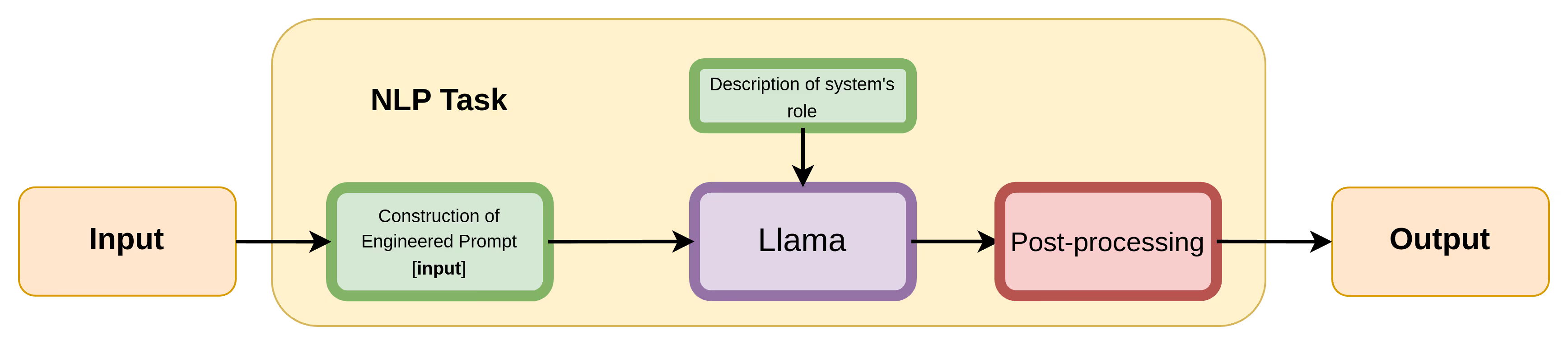

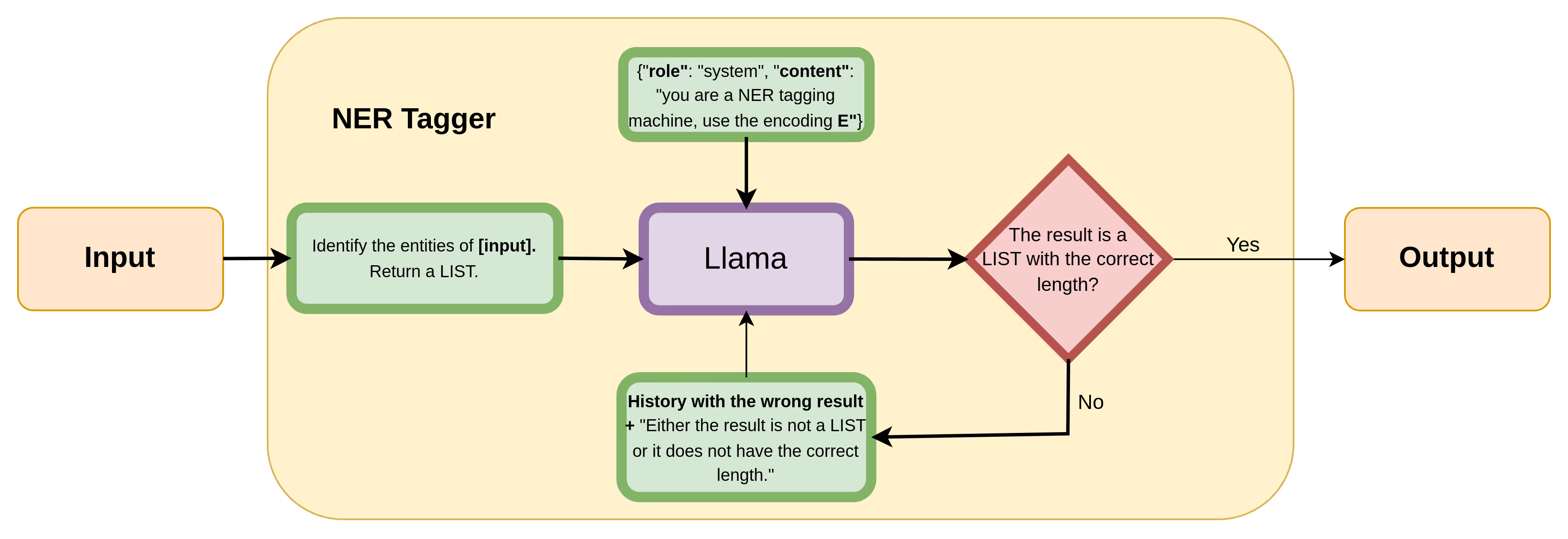

The procedure of using generative pre-trained transformers for specific tasks without fine-tuning is known as prompt engineering. This method involves crafting prompts that guide the model towards producing the desired output. However, this is only part of the process, as further post-processing is typically required to make the result usable. The following figure illustrates the workflow of a machine performing a specific NLP task using Llama or another LLM. The model is “programmed” by assigning it a specific role, and the inputs to this “program” are carefully engineered prompts created after parsing the input data. The model’s output is then post-processed. For example, in the case of NER, the output might be a list of entities encoded as a string, which often needs additional processing to extract meaningful information.

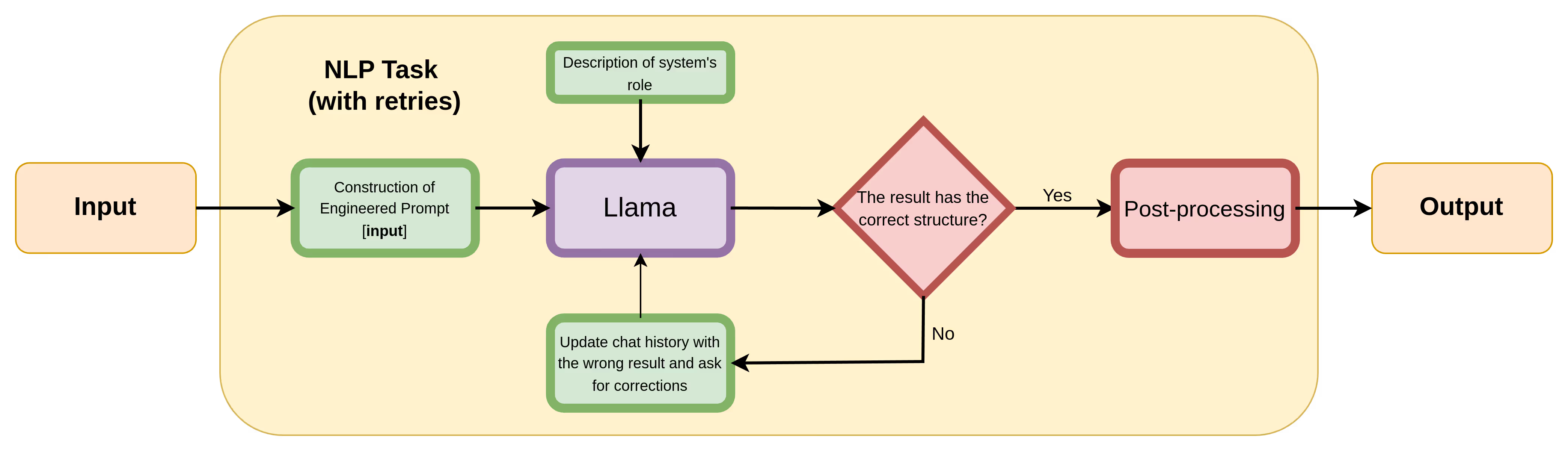

In this simplified example, we assume that the output is already acceptable in the sense that it is ready to be post-processed. However, the model might return a list of entities in an incorrect format or even something like “Hey, here is your NER analysis:…”. Whether such output is considered acceptable depends on the details of the post-processing step. In this work, we classify the latter as unacceptable outputs and prompt the model again, providing feedback on its possible mistakes and requesting a corrected response.

The following figure presents a more detailed workflow, which includes a retry mechanism. This step allows the process to be repeated a number of times until the model provides the correct output. In addition to prompt engineering and post-processing, this iterative retry step is a crucial part of our approach.

We employ this approach throughout our implementation.

The parameters relevant to our analysis are the temperature and the context window. On one hand, temperature controls the randomness in the model’s outputs, affecting its “creativity” and the diversity of predictions. We explored its impact by performing the same experiments across different temperatures, as discussed in detail below. On the other hand, the context window determines the maximum amount of text the model can process and consider in a single query, which is particularly important for tasks requiring an understanding of broader contexts or long prompts.

Llama 3.1 models feature an impressive context window of up to 128k tokens, enabling them to manage extensive inputs and capture long-range dependencies in text data. However, choosing a larger context window increases the memory requirements, which can affect execution on local installations. The importance of these parameters varies depending on the specific NLP task.

To better understand the role of temperature, let us first discuss the activation function used in the final step of language models (and in many other models that handle probabilities): the softmax function. Its role is to take the raw outputs of the model (called logits) and convert them into values that can be interpreted as probabilities. The softmax function is defined as follows:

where the zi are the raw outputs. The model’s final outputs are pi = softmax(zi), representing the probabilities of the model predicting the i-th word. Notably, the softmax function is one of the few activation functions that depend on all the model’s outputs. This is crucial, as probabilities must be normalized to sum to 1.

Next, an interesting concept borrowed from physics is the temperature. The temperature T is a parameter that controls the entropy of the model’s predictions for the final distribution—or, more simply, its randomness. It is introduced into the softmax function as follows, mimicking the Boltzmann distribution:

For T = 0, the softmax function becomes the argmax function, which always selects the word with the highest probability. As T increases, the probabilities of all words become more similar, leading to more diverse predictions. It is worth mentioning that the ranking of the words remains the same; only the probabilities change.

For NLP tasks that do not require building up a large chat history, maintaining a small context window is ideal to save memory, as it is typically pre-allocated. Although the model parameters are fixed, each head within each transformer multi-head layer constructs an attention matrix of size n × n, where n is the context window. For an introductory overview of the self-attention mechanism, we recommend reading this blog post.

By default, llama.cpp reserves memory for a context window of 2048 tokens. This was sufficient for us for all tasks except POS tagging. For this task, the prompt length required to provide the model with enough context to correctly tag the words was too long. In this case, we increased the context window to 4096 tokens, which was sufficient to process almost all samples.

When “programming” LLMs to perform NLP tasks or to emulate specific roles (e.g., a chatbot constrained to a particular domain), the system role establishes the model’s directives. For instance:

{"role": "system", "content": "You are a sentiment classification machine. Return 0 for NEGATIVE and 1 for POSITIVE. Return only the integer and nothing more."}

As part of prompt engineering, a user role can be added to interact with the system, providing input data. For example:

{"role": "user", "content": "I loved the movie, it was amazing!"}

The system then processes the user’s input and returns the desired output. This method was used for sentiment analysis tasks throughout this work.

However, this approach proved ineffective for Named Entity Recognition (NER) and Part-of-Speech (POS) tagging tasks for reasons that remain unclear. For these tasks, we incorporated both the system role and the query within the system content itself. For instance, for NER:

{"role": "system", "content": f"You are a named entity recognition machine that returns ONLY a list where each token (word) in the sentence is associated with its corresponding integer tag. The encoding is: {ENTITY_ENCODING}. 'B-' indicates the beginning of an entity, 'I-' continues the same entity, and 'O' is used for non-entities. Here’s an example of what you should output: Example: For the input sentence [I live in New York], you should return ONLY: [('I', 0), ('live', 0), ('in', 0), ('New', 5), ('York', 6)]. Now perform the NER tagging for the sentence [{text}] and return ONLY a LIST of TUPLES, nothing more."}

Providing the query via the user role yielded worse results in early tests compared to embedding the query directly in the system role.

For simple classification tasks, such as sentiment analysis, the output is typically a single integer or value (e.g., 0 or 1, “positive” or “negative”). Due to its simplicity, the model generally performs well at following the prompt and producing accurate results.

However, for more complex tasks like NER or POS tagging, requesting a list of integers representing the entity encodings or tags resulted in poor outcomes. Even for short sentences, the generated lists often had incorrect lengths.

To overcome this issue, we modified the output format to a list of tuples, where each tuple pairs a word with its corresponding tag. This format compels the model to match the correct sentence length, ensuring alignment between the input and output, and facilitates smooth post-processing.

During the post-processing, if after a number of attempts the model fails in one way or another, it returns NaN as the output. This allows us to identify the cases where the model is unable to provide a correct answer, or an answer at all.

Since we explored only classification tasks and our objective is to compare the performance of different models and quantizations, confusion matrices and F1 scores were sufficient for our analysis. These metrics allow us to measure how well the models perform in classifying data into predefined categories, providing insights into their precision, recall, and overall effectiveness.

The confusion matrix is a table that summarizes the performance of a classification model by displaying the counts of true positives, true negatives, false positives, and false negatives. It offers a clear picture of where the model excels and where it struggles, helping to identify specific types of errors.

The F1 score is computed with the information provided by the confusion matrix. It is defined as follows:

where precision is the proportion of correctly predicted positive instances out of all predicted positives:

and recall is the proportion of correctly predicted positive instances out of all actual positives:

The F1 score is interpreted as a balance between precision and recall, with a value of 1 representing perfect precision and recall, and 0 indicating that the model failed entirely.

We use the collection of quantized Meta’s Llama 3.1 models with 70B parameters, available here, as well as Nvidia’s Nemotron 70B, which is based on Llama 3.1. The quantizations were created using llama.cpp, and we also use llama.cpp to run the models locally.

Details about the machine used to run these models, along with an analysis of other tools for running Llama models locally, can be found in our previous post. The quantization nomenclature can be found here and here. Also, a fantastic visual guide is available here.

Binary sentiment classification is a common NLP task that involves categorizing text into two classes: positive and negative. This is the simplest form of sentiment analysis and the simplest task analyzed in this work. Moreover, the results will serve as a reference for understanding the behavior of the models when performing more complex tasks.

To benchmark the models, we used the Rotten Tomatoes movie reviews dataset, which contains reviews labeled as either positive or negative.

In the following two figures, you will find the results of the binary sentiment classification task using several Llama 3.1 70B quantizations. We also computed the same benchmark using four ChatGPT models for comparison. The F1 score is plotted against the temperature, with the temperatures used being T = 0.0, 0.5, 1.0, 1.5 for all models. For each point we computed the F1 score for each outcome type using 100 samples from the dataset.

This task turned out to be quite interesting and challenging. The models struggled a lot more to classify text into three categories: positive, negative, and neutral. To benchmark the models, we used this dataset. The prompt was simply modified to include the three classes with their corresponding encoding.

Similarly to the previous section, in the following two figures, you will find the results of the multi-class sentiment classification task using several Llama 3.1 70B quantizations. We also computed the same benchmark using four ChatGPT models for comparison. The F1 score is plotted against the temperature, with the temperatures used being T = 0.0, 0.5, 1.0, 1.5 for all models. For each point we computed the F1 score for each outcome type using 100 samples from the dataset.

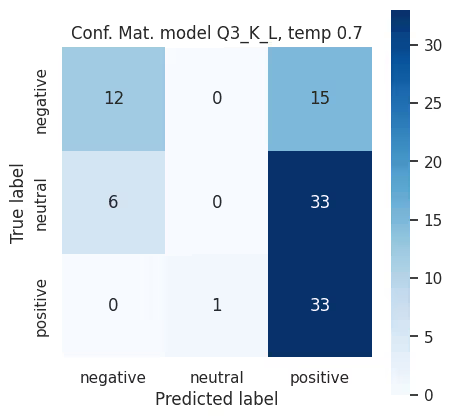

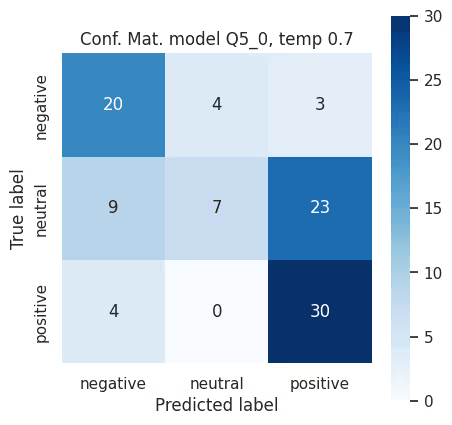

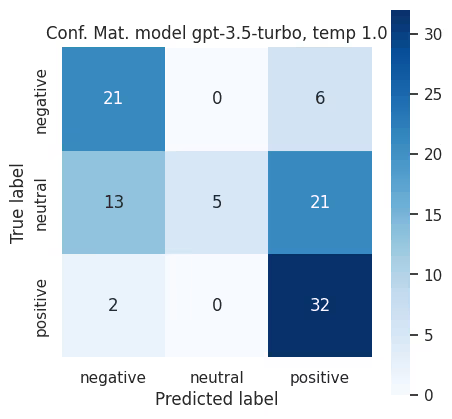

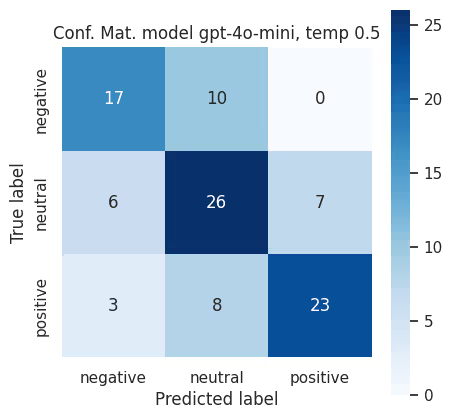

You can observe that there is a big drop in performance respect to binary sentiment classification, even for ChatGPT 4o. To understand what is happening in more detail, we computed the confusion matrices for the best and worst (both Llama and ChatGPT) performing models in this task.

Confusion matrices for multi-class sentiment classification using Llama 3.1 Q3_K_L quantization (top), the worst-performing model among Llama variants, and Q5_0 quantization (bottom), the best-performing model, both at a temperature of 0.7, the model struggles to accurately classify neutral reviews.

Confusion matrices for multi-class sentiment classification using GPT-3.5-turbo with temperature 1.0 (top), the worst-performing model among ChatGPT variants, and GPT-4o-mini at temperature 0.5 (bottom), the best-performing model. GPT-4o-mini performs better with the neutral tag but now struggles with the negative tag.

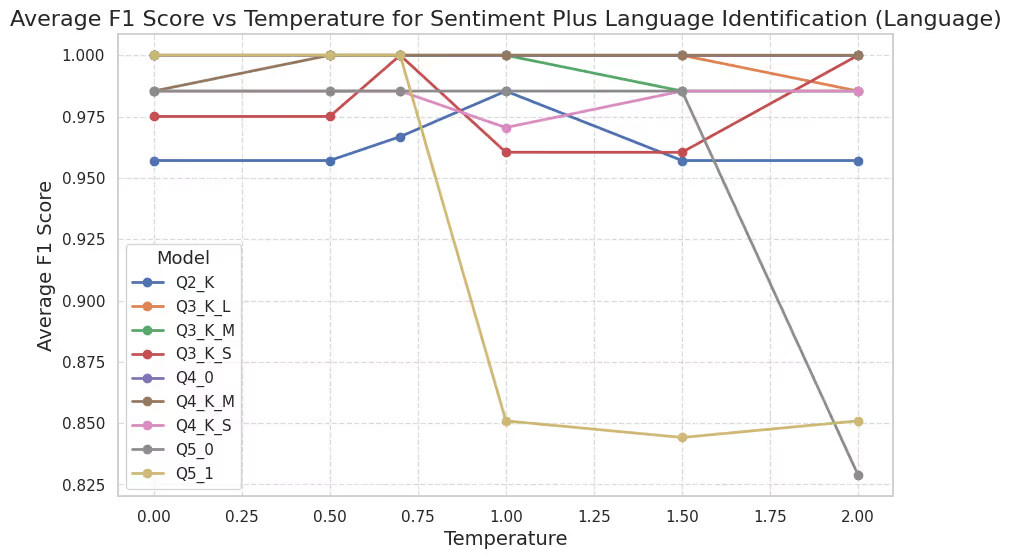

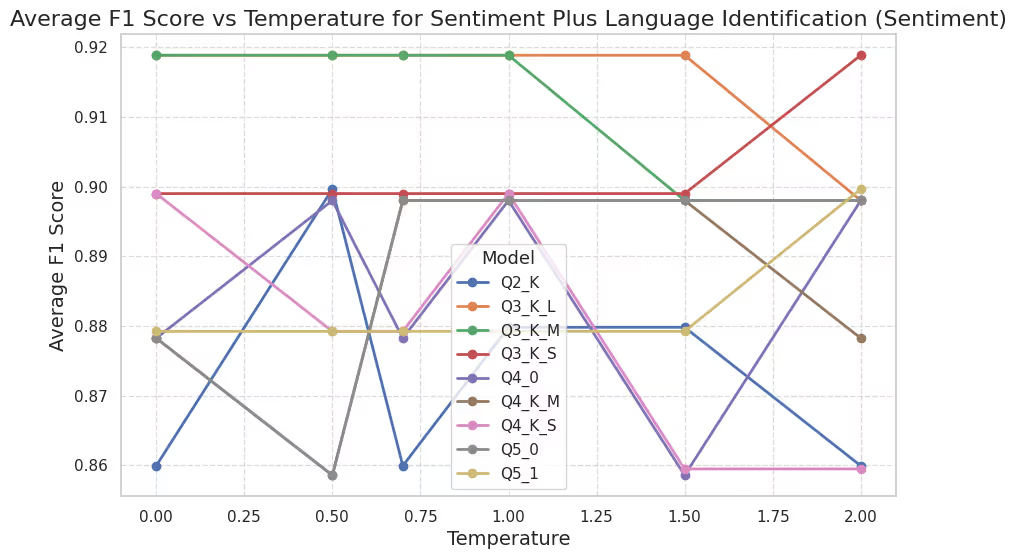

In this section, we combined sentiment analysis with language identification to create a more involved task, nonetheless, each individual task is still relatively simple. The sentiment analysis is binary and the language identification is multi-class, including English, Japanese, German, French, Spanish, and Chinese. The dataset used for this task is the multilingual amazon reviews.

The prompt is engineered to return a dictionary with the sentiment and language as keys. Then, the post-processing consists of simply extracting the dictionary and the data within. Additionally, unlike the pure sentiment classification tasks, we wrapped the entire prompt inside the system role.

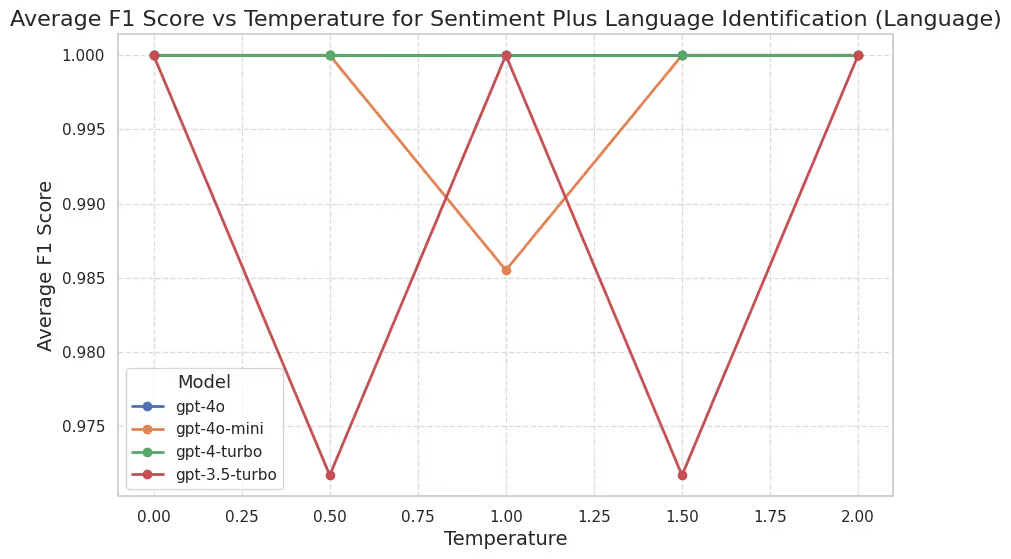

Similarly to the previous section, in the following two figures, you will find the results of the sentiment+language identification task using several Llama 3.1 70B quantizations. We also computed the same benchmark using four ChatGPT models for comparison. The F1 score is plotted against the temperature, with the temperatures used being T = 0.0, 0.5, 1.0, 1.5 for all models. For each point we computed the F1 score for each outcome type using 100 samples from the dataset.

In this section we discuss the results of two very complex tasks: Named Entity Recognition (NER) and Part-of-Speech (POS) tagging. These tasks require a deep understanding of the text and its context, making them particularly challenging for generative transformers. We use conll2003 database for both tasks. Below you will see a scheme depicting the workflow used for NER (and POS) tagging.

Additionally, both NER and POS tagging use BIO encoding, which stands for “beginning,” “inside,” and “outside”.

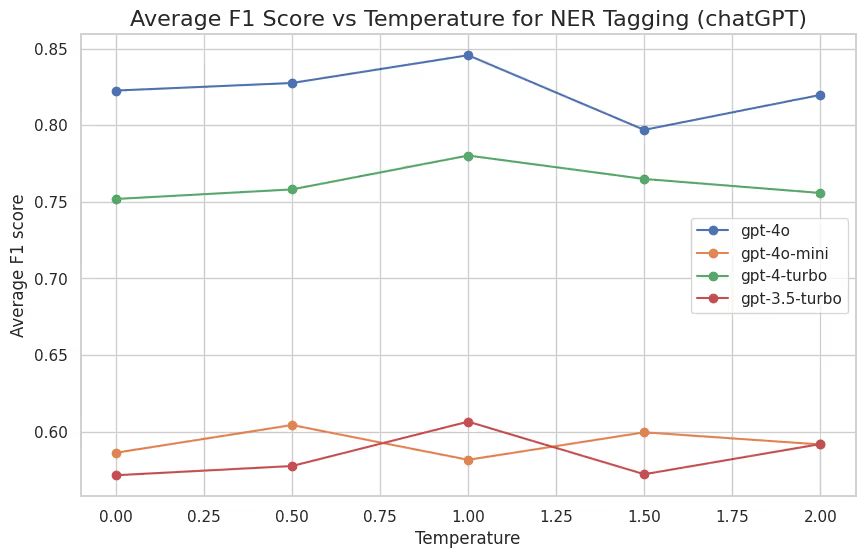

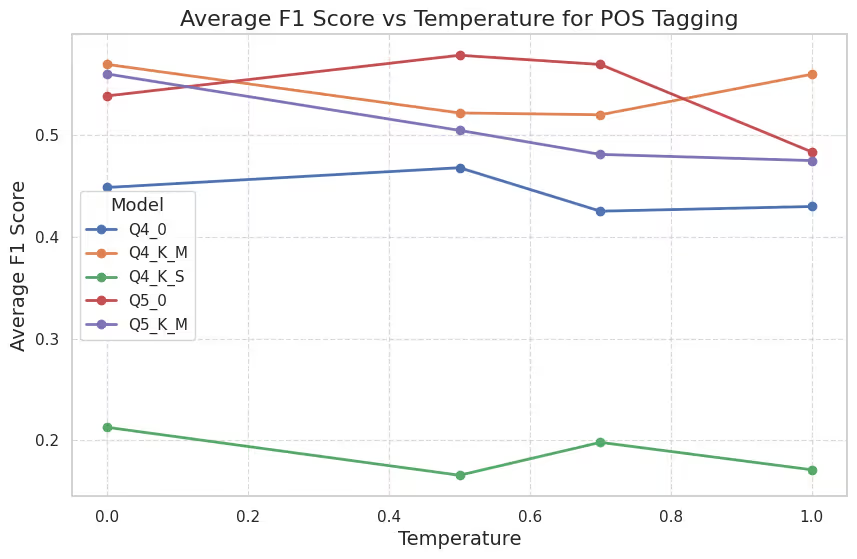

In what follows we present the results for NER first, for Llama and ChatGPT models, and then for POS tagging with a similar structure. The F1 score is plotted against the temperature For each point we computed the F1 score for each outcome type using 100 samples from the dataset.

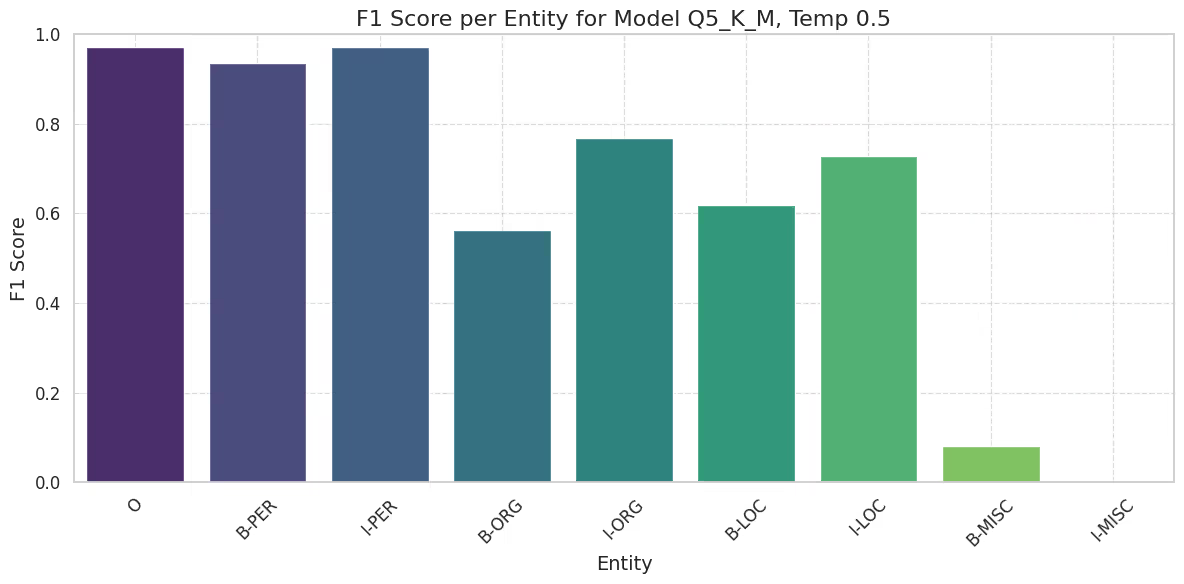

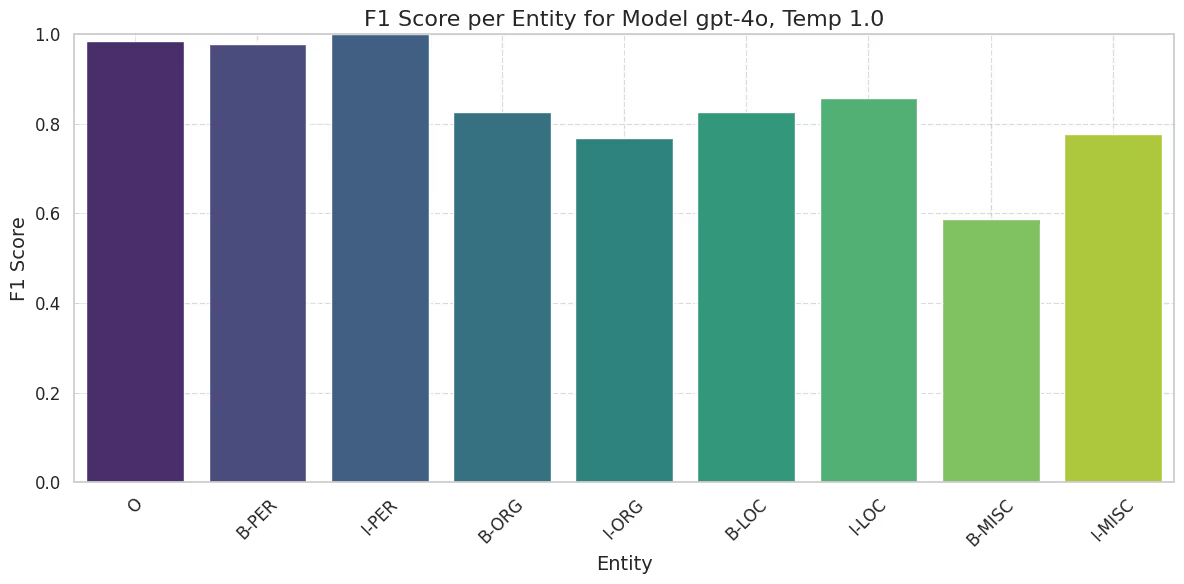

You can observe that all models struggle with this task and a similar gap in performance is present. To understand what is happening, let us look at the F1 scores per entity and the confusion matrix of the Q5_K_M model, which is the best-performing model in this task.

The model basically performs quite decently with person entities but fails totally with miscellaneous entities. True I-MISC predicted labels are spread across other entities and B-LOC is often confused with B-ORG.

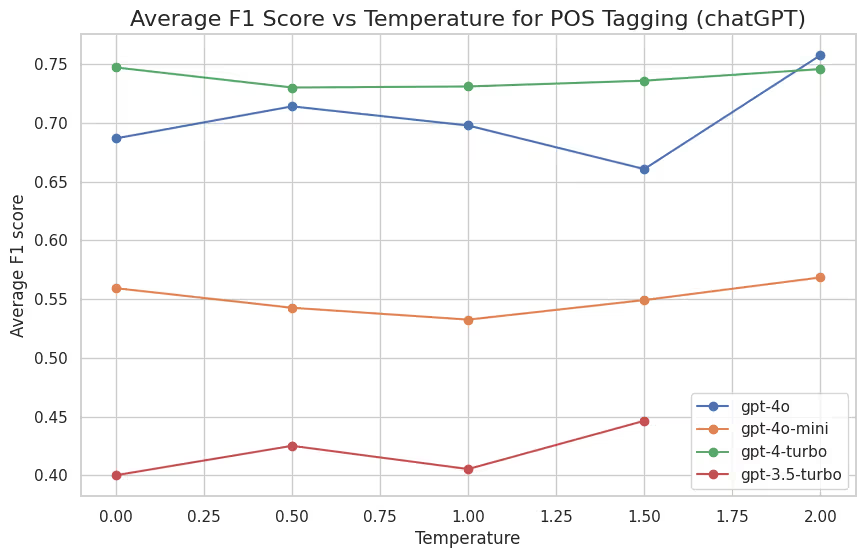

Now let’s analyze ChatGPT models.

Models gpt-4o and gpt-4-turbo perform significantly better than gpt-3.5-turbo and gpt-4o-mini. Nonetheless, the latter two models perform quite similarly to the best Llama models. Let’s take a closer look at the performance differences between gpt-4o and gpt-3.5-turbo in detail.

The model gpt-3.5-turbo performs quite similar to the best Llama model, struggling with miscellaneous entities too. This problem is almost cured with gpt-4o.

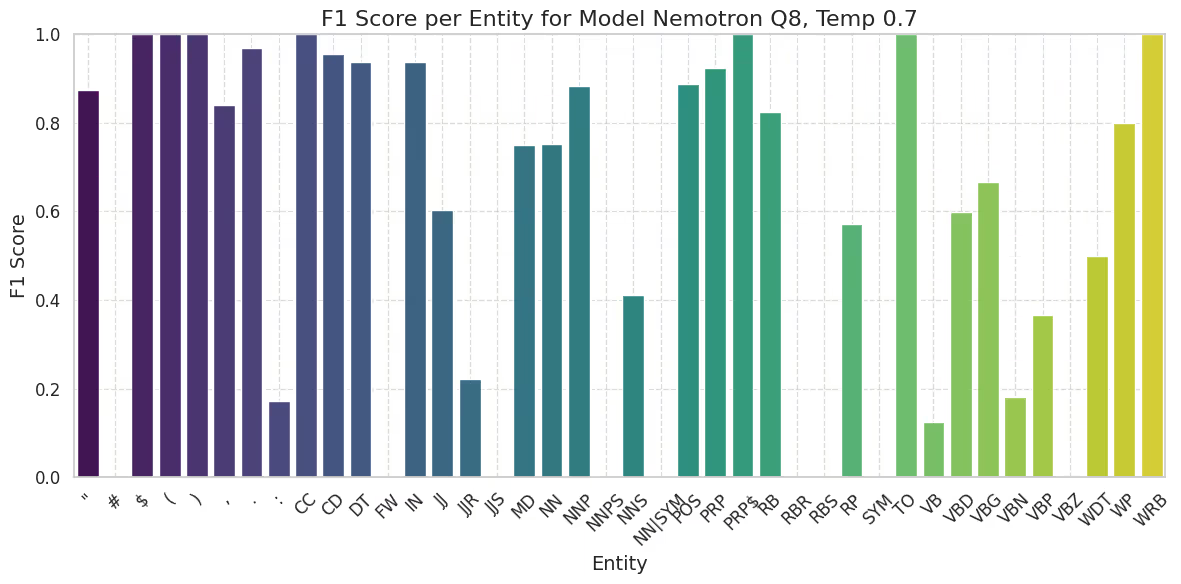

Find below the results for POS tagging with a similar setup as NER tagging, but this time we plot only those models that passed successfully the post-processing step. The size of the sample is 20, but some models struggled to even give a single correct output. Thus, we selected those models that successfully tagged at least 11 samples.

The relatively small sample size is due to the increased context window required for POS tagging, which led to higher memory requirements and computational time. Consequently, a more thorough analysis was conducted with the largest models, Nemotron and Llama Q8_0, in the next section.

The results of POS tagging with Llama are less promising than those of NER tagging. It is worth mentioning that the models could struggle more with POS tagging due to the increased size of the prompt. To ensure that the model had the whole context, we provided two dictionaries: one for the encoding and one for the meaning of each abbreviation of the tags.

NER and POS tagging are particularly challenging because they require an understanding of both the semantics and context of words. For instance, NER involves recognizing names of people, organizations, locations, or even dates and categorizing them correctly. This task becomes complex when dealing with ambiguous words, such as “Apple,” which could refer to the fruit or the company, depending on the context. Similarly, POS tagging assigns grammatical categories—such as nouns, verbs, and adjectives—to words, requiring the model to grasp sentence structure and syntax.

Generative language models, like GPT, often struggle with these tasks because their primary design focuses on generating coherent and contextually relevant text rather than labeling or classifying individual components. These models use a mask in the attention matrices to process language in a left-to-right or auto-regressive manner, predicting the next word based on the previous ones. This approach can lead to issues when trying to predict the correct labels for words that depend on context across an entire sentence.

NER demands a high level of precision and contextual understanding, especially when identifying rare or domain-specific entities. For example, recognizing technical jargon in legal or technical texts requires specialized knowledge. Additionally, entities can overlap or appear in nested forms, adding another layer of complexity.

To deal with these tasks, it is better to process text in both directions to capture the full context of a sentence. After all, the masking is not necessary to classify entities or parts of speech, we do not care about the causal relationship between words, only their relationships. This is where bidirectional transformer models like BERT (Bidirectional Encoder Representations from Transformers) shine. Unlike generative models, BERT reads entire sentences simultaneously, capturing context from both directions. This bidirectional nature allows the model to consider all words in a sentence before assigning labels, making it highly effective for tasks like NER and POS tagging. Moreover, BERT does not have to actively care about the length of the sentence. Additionally to BERT, there are other models based on slightly different self-attention mechanism that handle differently the position embedding, like TENER.

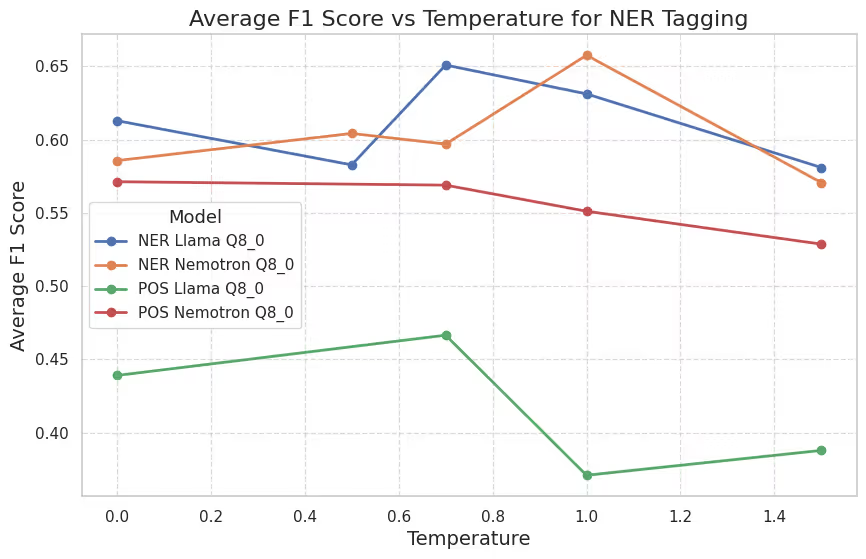

To finish the analysis, we used larger models for the most complex tasks, namely Llama 3.1 Nemotron 70B Instruct Q8_0 and Llama 3.1 Q8_0. They are larger in the sense that the quantization is less aggressive. We ran the same NER and POS tagging benchmarks with these models to compare their performance with the smaller quantizations. The results are presented below.

Now let’s look in detail the F1 scores for NER and POS tagging for Nemotron and Llama Q8_0, which emerged as the best model in the previous section, although for different temperatures.

Throughout this post, we have explored various NLP tasks, including NER, POS tagging, sentiment analysis, and language classification, each with its own unique challenges and opportunities. While generative transformers like Llama have shown promising results in some areas, tasks such as POS tagging and NER highlight the complexities that still need to be addressed for effective real-world deployment. Notably, only in NER and POS tagging did ChatGPT 4o demonstrate a significant performance advantage over Llama models and older (or smaller) ChatGPT versions.

At Austin Ai, we believe that the current landscape of NLP technologies offers immense room for improvement. By integrating state-of-the-art advancements in transformer models with tailored engineering solutions, we strive to bridge the gap between academic innovation and practical implementation. For example, we have developed and studied our own NER tagging models based on TENER and oriented to specific domains, which offer enhanced performance for specific use cases. Additionally, the advent of open-source models with capabilities comparable to ChatGPT 4o represents another exciting avenue for advancement, providing greater accessibility and customization for diverse applications.